Digital advertising relies on serving the right ad to the right audience in real time. The better we get at that, the better we perform. That’s the industry mantra, and with good reason.

We’ve witnessed vast improvements in the volume and accuracy of customer data, and we’ve worked with increasingly sophisticated ad platforms.

Machine learning and AI improve the ability to recognize patterns and pull the right responses from a database. Anyone who doesn’t learn to use the AI features of ad platforms now will be left behind. Many already have been.

This means rethinking attitudes, habits, and methods—sometimes profoundly. It ushers in a new era of best practices.

While the space is still evolving, we’ve been in it for a while. Here are four keys to taking advantage of ad platforms’ machine-learning capabilities in your campaigns.

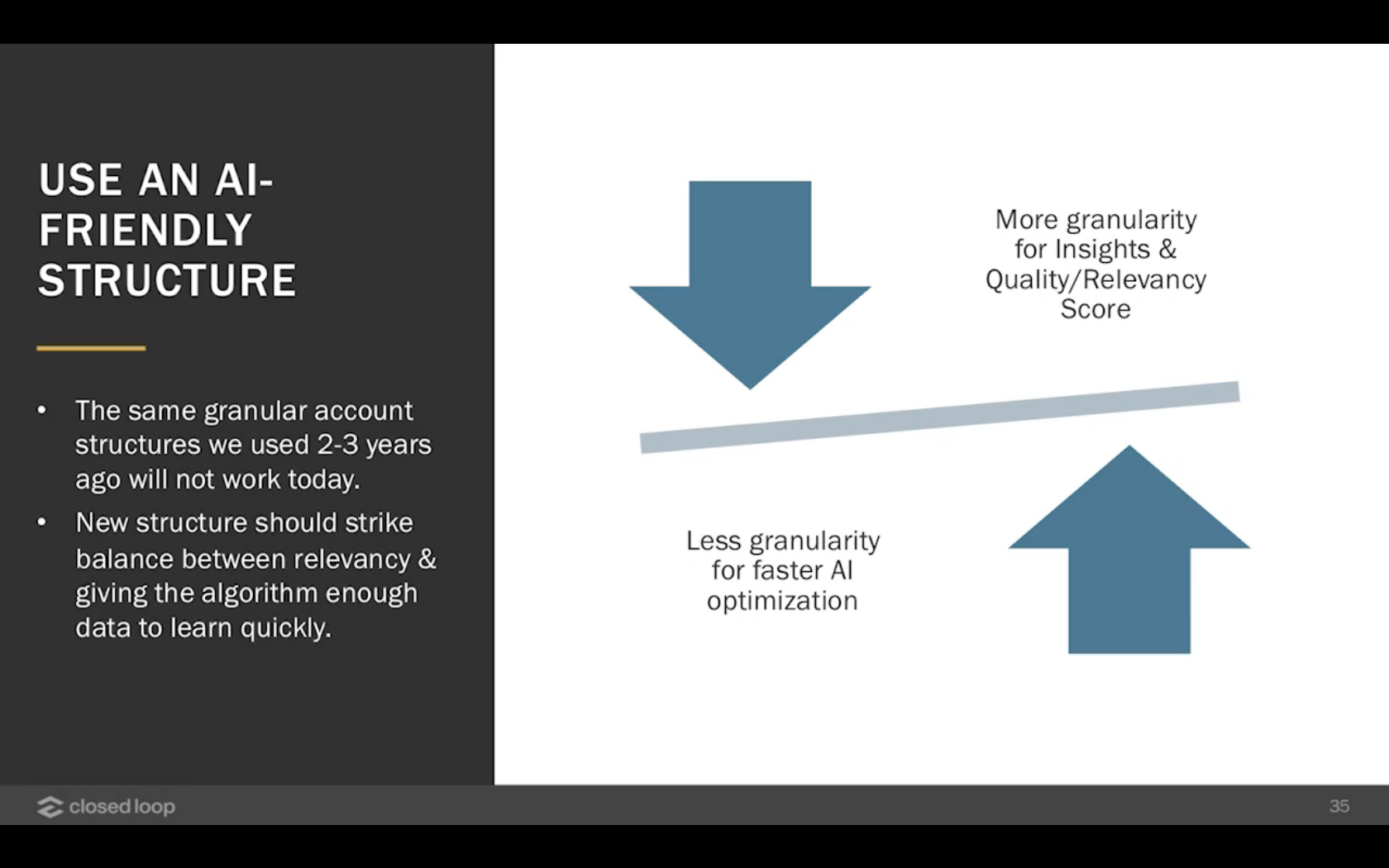

1. Use an AI-friendly structure.

In the past, advertisers focused on granularity. “We can account for every penny of spend and show provable ROI”—that was the aim. Now, that same highly granular structure actually limits campaign success.

We have to strike a new balance:

On one side, we have the traditional rewards of higher granularity: higher quality/relevance scores, better insights, and so on. We still want these, but the route we take to them has changed because we’re no longer doing the heavy lifting.

Instead, we’re teaching an AI system to deliver these results for us. All major ad platforms, especially Google and Facebook, use AI to target ads, manage bids and budgets, and, increasingly, optimize creative. To let them do that quickly and at scale, we often need to sacrifice some granularity in exchange for a data set that can train the AI system faster.

When the balance is struck correctly, results improve drastically—the AI system is more responsive and attentive to data than a human could ever be. What we lose in granularity we make up for in overall accuracy and effectiveness.

Having said that, we still want enough granularity to get some signal about what’s working and what’s not. Choosing the destination is not what AI excels at; that’s still our job.

Take Facebook. Back in 2017, Facebook recommended a highly granular approach:

By 2019, things looked very different—fewer campaigns, fewer ad sets:

The rationale for this contraction in ad campaigns and sets—this granularity shrinkage—is that the goal has shifted from “control every penny of spend ourselves” to “rapidly train the AI.”

During the learning phase, you can see performance drop by around 30%. Much of what we once gained from granularity is eaten up by the cost of training the AI, so it’s crucial to shorten this learning phase, which is when performance is at its worst.

It’s gut-churning to wait it out. But exerting more control (via enhanced granularity) only constricts the incoming data and prolongs the learning phase.

Typically, this updated approach does yield better results. Initially, however, we found it extremely counterintuitive and had to run many experiments to be convinced of its effectiveness.

How does it look outside Facebook? We do our own visualizations, which I’ll introduce to make my point. The size of each box represents spend; color represents ROI—red is bad, blue is good.


What you see in this visualization is a hyper-granular campaign structure built on single-keyword ad groups (SKAGs), in which small campaign size seems to correlate with poor ROI (lots of small red boxes). The person who built this campaign clearly wanted maximum control.

But they’re not getting enough data from smaller campaigns to optimize them in a meaningful way. They’re probably telling themselves that this is by design—that it’s part of a “portfolio management” strategy in which you accept lower performance from a (large) number of campaigns but balance that with higher returns from other areas. It sounds good in principle (and actually used to work that way).

The problem? This isn’t a machine learning–friendly structure. In many cases, the extreme granularity doesn’t just prolong the learning phase; it keeps the AI from ever leaving it. Spread across so many tiny units, the system never collects enough data to learn, and profitability stays permanently out of reach.

In fact, over 50% of the ad groups in this visualization were actively running but had not earned a click in three months. Of the rest, most got no more than five clicks over the same three-month window and showed no prospects for growth.
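An audit like this is easy to automate. The sketch below is a minimal illustration, assuming you have exported each ad group’s name, three-month click count, and status into simple records; the `AdGroup` fields, the five-click cutoff, and the sample names are hypothetical and do not reflect any platform’s report format.

```python
from dataclasses import dataclass


@dataclass
class AdGroup:
    name: str
    clicks_90d: int  # clicks over the trailing three months
    active: bool     # still running / eligible to serve


def audit_ad_groups(ad_groups, low_click_threshold=5):
    """Bucket running ad groups by three-month click volume."""
    buckets = {"no_clicks": [], "low_clicks": [], "viable": []}
    for group in ad_groups:
        if not group.active:
            continue  # only audit groups that are still running
        if group.clicks_90d == 0:
            buckets["no_clicks"].append(group.name)
        elif group.clicks_90d <= low_click_threshold:
            buckets["low_clicks"].append(group.name)
        else:
            buckets["viable"].append(group.name)
    return buckets


# Hypothetical sample export.
sample = [
    AdGroup("kw_red_trail_shoes", 0, True),
    AdGroup("kw_blue_trail_shoes", 3, True),
    AdGroup("kw_trail_shoes", 412, True),
    AdGroup("kw_old_promo", 0, False),
]
result = audit_ad_groups(sample)
for bucket, names in result.items():
    print(f"{bucket}: {names}")
```

Groups in the first two buckets are the candidates for pausing or folding into larger ad groups.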

Consolidating spend in areas with a large ROI, and pulling the plug on thousands of tiny, go-nowhere ads, makes sense with or without AI. With AI in the mix, though, it’s also far better to give up some of the gains from granularity and focus instead on getting the AI to full efficacy faster.

We restructured that campaign to maximize its efficacy for the AI, and you can see the difference:

There are still a few smaller ad groups; this isn’t just three big fields. Granularity is a continuum, not a choice between maximum and minimum with nothing in between. But axing thousands of minute, ineffective ads that were impossible to manage trained the AI much faster.

What’s crucial is the degree of granularity in the top 70% of spend volume, which you can see if we stack these two charts on top of each other:

The area outlined in yellow in the top image contains just under 300 ad sets; the one below, 23. Removing low performers from the top 70% led to a radical increase in overall performance.
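To check the same thing in your own account, you can count how many ad sets make up the top 70% of spend. This is a minimal sketch, assuming spend per ad set is available as a dictionary; the ad set names and amounts are hypothetical. Taking ad sets greedily from the largest spend down gives the smallest number of ad sets that reaches the target share.

```python
def ad_sets_covering_share(spend_by_ad_set, share=0.70):
    """Fewest ad sets (largest spend first) whose combined spend reaches `share` of the total."""
    total = sum(spend_by_ad_set.values())
    ranked = sorted(spend_by_ad_set.items(), key=lambda item: item[1], reverse=True)
    selected, covered = [], 0.0
    for name, spend in ranked:
        if covered >= share * total:
            break  # target share already reached
        selected.append(name)
        covered += spend
    return selected


# Hypothetical spend per ad set.
spend = {
    "brand": 42_000,
    "competitor": 18_000,
    "generic_a": 9_500,
    "generic_b": 6_000,
    "long_tail_1": 300,
    "long_tail_2": 120,
}
top = ad_sets_covering_share(spend)
print(len(top), "ad sets cover the top 70% of spend:", top)
```

If that count runs into the hundreds, the high-spend part of the account is probably more granular than the algorithm can learn from.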

2. Optimize for the right metrics.

The practical necessities, as well as the advantages, of machine learning have changed how we think about the metrics we optimize for. One of the challenges here is, again, to find the correct balance.

This time, we’re trying to balance funnel depth with data volume. We need enough data to give the algorithm something to learn on and optimize against, but we also want to be as far down the funnel as possible.

However your “funnel” actually functions—most look like maps of some kind of futuristic metro more than a linear funnel—it’s wider at the top.

Across industries, the conversion rate from marketing-qualified lead (MQL) to sales-qualified lead (SQL) ranges from 0.9% to 31.0%, and conversion from SQL to customer averages around 22%. But even these sharp bottlenecks pale in comparison to the top of the funnel: the top-performing Google Ads accounts have conversion rates of 11.45%, while the median is 2.35%.

The result? We have many more data points at the top of the funnel, so it’s easier to train the AI on that bigger data set. However, success there is not only less valuable but potentially misleading. You can be winning on paper and losing in real life if you’re optimizing for the wrong metric. And top-of-funnel (TOFU) metrics like lead volume or cost per lead (CPL) can often be the wrong metrics.
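The data-volume trade-off is simple arithmetic: each stage multiplies the volume by its conversion rate. The sketch below uses the 2.35% median and the 22% SQL-to-customer average cited above, and assumes a Google Ads conversion equals a lead. The lead-to-MQL rate (30%) and the MQL-to-SQL rate (13%, a point inside the cited range) are hypothetical placeholders, not figures from the article.

```python
def funnel_volumes(clicks, stage_rates):
    """Apply successive stage-to-stage conversion rates to a click count."""
    volumes = {"clicks": float(clicks)}
    current = float(clicks)
    for stage, rate in stage_rates:
        current *= rate
        volumes[stage] = current
    return volumes


STAGES = [
    ("leads", 0.0235),    # median Google Ads conversion rate, treating a conversion as a lead
    ("mqls", 0.30),       # HYPOTHETICAL lead-to-MQL rate; no figure given in the article
    ("sqls", 0.13),       # HYPOTHETICAL point inside the 0.9% to 31.0% MQL-to-SQL range
    ("customers", 0.22),  # average SQL-to-customer rate cited above
]

for stage, count in funnel_volumes(100_000, STAGES).items():
    print(f"{stage:>9}: {count:,.1f}")
```

With these inputs, 100,000 clicks yield roughly 2,350 leads but only about 92 SQLs, which is why deeper metrics give the algorithm far less to learn from.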

Here’s what we’ve learned trying to find the right balance. A client had been optimizing for CPL and lead volume, aiming for maximum lead numbers with a $50-per-lead cap. We were getting good results.

We were also getting a clear view of which leads were worth buying and which weren’t. Some leads, from head terms in our space, looked attractive but were too expensive, at $100 each in some cases. Why would we spring for those when we had tons of great leads at $50 or less?

Then, we switched to a metric a couple of steps deeper into the funnel and started optimizing for SQLs. Here are the before-and-after stacked bar charts, with each color area representing a keyword theme:

The low-cost leads that looked like great value weren’t converting at a worthwhile rate; measured per SQL, they were about 40% overpriced. Meanwhile, the higher-cost leads were turning into lower-cost SQLs. Paradoxically, they cost more per lead and less per SQL. We maxed those out and saw a sharp increase in profitability.
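The paradox disappears once you divide cost per lead by the lead-to-SQL rate. The rates in this sketch are hypothetical, picked so the gap lands near the roughly 40% figure above; the article reports the outcome, not the underlying conversion rates.

```python
def cost_per_sql(cost_per_lead, lead_to_sql_rate):
    """Effective cost of one SQL when each lead costs `cost_per_lead`."""
    if not 0 < lead_to_sql_rate <= 1:
        raise ValueError("lead_to_sql_rate must be in (0, 1]")
    return cost_per_lead / lead_to_sql_rate


# HYPOTHETICAL lead-to-SQL rates for illustration only.
cheap = cost_per_sql(50, 0.10)     # $50 leads, 10% become SQLs
premium = cost_per_sql(100, 0.28)  # $100 leads, 28% become SQLs

print(f"$50 leads:  ${cheap:,.2f} per SQL")
print(f"$100 leads: ${premium:,.2f} per SQL")
print(f"cheap leads cost {cheap / premium - 1:.0%} more per SQL")
```

Whenever the expensive lead’s SQL rate is more than double the cheap lead’s, the $100 lead produces the cheaper SQL.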

This insight changed how we allocated spend. SQL volume increased nicely even though lead volume dropped in some cases. That’s hard for some clients to accept at first, but given the choice between more leads and more qualified leads, they opted for the latter.

We never would’ve seen this without optimizing to that lower-funnel metric, but we still wouldn’t have seen it if we weren’t measuring and correlating across the funnel. Measure and optimize multiple metrics, multiple interconnected customer behaviors and actions—not just one. Feeding information from down-funnel to higher-funnel helps targeting, so the leads are better quality to begin with.

Tracking multiple metrics also improves accuracy, much as triangulating on a distant object reveals new information such as its distance and elevation. Track two, three, or five customer data points, and you develop new insights. Doing this at scale isn’t practical without AI because of the enormous number of calculations involved.

Correlating deeper conversion data back to campaign data is a technical challenge, so we’re about to get super geeky with this. Here’s how it’s done with Google:

You pass a click identifier, such as the Google Click ID (gclid), through to your CRM and record it in the customer database alongside that lead. Then you send the downstream outcomes (for example, which leads became SQLs) back to Google as offline conversions keyed on that gclid. That lets the platform, and you, see which creative, audience, geography, and other variables improve deep-funnel metrics when making ad-buying decisions.
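With auto-tagging enabled, Google Ads appends a `gclid` parameter to the landing-page URL of ad clicks. The sketch below shows the capture-and-join idea only: the in-memory `crm` dictionary, the helper names, the stage labels, and the “Qualified lead” conversion name are hypothetical, and the output rows are not Google’s upload format. Map them to whatever offline conversion import method your account actually uses.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical in-memory stand-in for a CRM: lead_id -> lead record.
crm = {}


def extract_gclid(landing_url):
    """Return the gclid query parameter from a landing-page URL, or None."""
    values = parse_qs(urlparse(landing_url).query).get("gclid")
    return values[0] if values else None


def record_lead(lead_id, email, landing_url):
    """Store a new lead along with the click ID it arrived with, if any."""
    crm[lead_id] = {
        "email": email,
        "gclid": extract_gclid(landing_url),
        "stage": "lead",
        "stage_changed_at": None,
    }


def advance_stage(lead_id, stage, when):
    """Record that a lead moved deeper into the funnel (e.g. became an SQL)."""
    crm[lead_id]["stage"] = stage
    crm[lead_id]["stage_changed_at"] = when


def offline_conversion_rows(records, stage="sql", conversion_name="Qualified lead"):
    """One row per lead that reached `stage` and carries a gclid (field names illustrative)."""
    return [
        {
            "gclid": r["gclid"],
            "conversion_name": conversion_name,
            "conversion_time": r["stage_changed_at"],
        }
        for r in records.values()
        if r["stage"] == stage and r["gclid"]
    ]


record_lead("L-1", "ana@example.com", "https://example.com/demo?gclid=abc123&utm_source=google")
record_lead("L-2", "ben@example.com", "https://example.com/demo")  # no gclid: not a Google ad click
advance_stage("L-1", "sql", "2024-01-15 10:30:00")
print(offline_conversion_rows(crm))
```

Leads without a gclid are skipped because there is no ad click to attribute the downstream outcome to.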

But what happens if you switch from optimizing one metric to another? How does your AI implementation manage a new goal?

Managing your AI implementation when KPIs change

What’s odd is that this is a process you feel your way through. “Feel” isn’t a verb you normally hear in data-driven marketing, but that’s genuinely what it takes to reach the right outcome. We think of it as “riding the AI beast,” which is a very different and often more intuitive process than “engineering the best ROI.”

Here are a couple of examples. A client wanted to move from optimizing for a top-of-funnel button click to a middle-of-funnel sign-up. We changed the optimization metric in Google and Facebook—and had a very different experience across the two ad platforms.

We’re not saying which is which so as not to offend anyone. And we’ve seen wonky results on both. The point isn’t that one platform is better than the other. The point is that you need to be prepared for unexpected outcomes when dealing with AI.

Here’s the first ad platform:

All we did was flip the switch. We told the AI, “Stop doing that, and start doing this,” and you can see where that happened. Lead volume spiked while CPL dropped. Easy!

Which is cool—when it works. But it doesn’t always come out that way. Here’s the same project, same parameters, but different ad platform:

This is what it looks like when you can’t just flip the switch. In weeks four and five, and again in weeks eight and nine, we changed target bids and budgets to boost volume.

However, the algorithm didn’t have the right signals to meet the new bids and budgets, so it simply dropped the volume to almost zero. We tried a bunch of different things until we finally found a combination that let the algorithm function efficiently.

We were riding the beast here, and this likely will be your experience at least some of the time, too.

3. Let AI determine the creative fit.

Is this really a wise idea? We’re not necessarily talking about letting AI actually do creative, but we can use it to fit creative to the audience, sometimes in innovative ways. Let’s run through some examples, starting with responsive search ads.

If you’re not running responsive search ads, I urge you to reconsider—this is where the whole space is going. Google and Facebook already match the ad to the customer automatically. There’s no such thing as a “winning ad” anymore.

When you attempt to manipulate the algorithm to produce a traditional winning ad, you undermine overall performance, sometimes drastically. Even if you manage to produce a winning ad, you have no control over whether it’s shown—the platform makes that decision based on the audience and buyer journey stage.

If you’re not experienced with responsive ads, they work like this: You write pieces of an ad, and then the algorithm assembles them, matching each piece to the audience.

You can wind up with thousands of variations. It doesn’t make sense to have one definitive combination—a winning ad, as we used to think of it. Here’s how that looks in action on Google Ads:
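To see where “thousands of variations” comes from: Google’s responsive search ads accept up to 15 headlines and 4 descriptions and show up to 3 headlines and 2 descriptions at a time. This simplified count only covers ads that fill every slot, ignores pinning and ads served with fewer headlines, and assumes order matters (requires Python 3.8+ for `math.perm`).

```python
from math import perm


def rsa_full_combinations(headlines, descriptions, shown_headlines=3, shown_descriptions=2):
    """Ordered headline/description arrangements when every slot is filled and nothing is pinned."""
    return perm(headlines, shown_headlines) * perm(descriptions, shown_descriptions)


print(rsa_full_combinations(15, 4))
```

With all 15 headlines and 4 descriptions supplied, that is 2,730 headline orderings times 12 description orderings, or 32,760 full-slot ads.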

Same keyword, same advertiser, same campaign, but two different recipients at two different stages of the buyer journey. That means different ads.

This lets us step back from trying to figure out every tiny detail and refocus on strategic messaging. Messaging can and should still be tested and grounded in data; we just don’t need to track small-scale tactical details as closely.

The downside is that we lose some control. I don’t get a clear read on the ROI of specific ads, and, obviously, I don’t get a single winning ad. Instead, I get this:

So I come out of these campaigns with a feel for overall performance but without the granular insights we’ve come to expect. That circles back to the need to relinquish some control to get this technology to work for us.

The upside is that you can really improve your quality scores. We’re also finding that you can access additional inventory that wasn’t available to discrete ads.

Here’s what we saw in this case:

Those are giant, not incremental, changes. Assuming you’re doing the basics properly, those kinds of wins are hard to come by with traditional expanded text ads. But with AI steering creative fit, they’re what you should expect.

Responsive ads tend to optimize mostly for CTR, and there’s still no great way to test them. But adding them to each ad group has a positive impact on quality score, and they’re worth using in their own right.

Here’s how I recommend getting the best out of them:

Use drafts and experiments to test variables.

Add more responsive search ads to each ad group.

Use the combinations report to identify segments driving the most and least impressions.

Remember that responsive search ads recombine and serve ad elements in real time, so you’ll never be able to put together a true apples-to-apples comparison.

Don’t forget to use the ad strength indicator as a baseline.
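Once you have combinations data, a quick aggregation shows which individual assets the system serves most and least. This sketch assumes you have already exported the combinations into rows of headlines, descriptions, and impressions; that row shape and the sample copy are hypothetical, not the report’s actual export format. Because combinations are recombined in real time, the totals describe what the system chose to serve, not a controlled comparison.

```python
from collections import Counter


def impressions_by_asset(rows):
    """Sum impressions per individual headline or description across combinations."""
    totals = Counter()
    for row in rows:
        for asset in row["headlines"] + row["descriptions"]:
            totals[asset] += row["impressions"]
    return totals


# Hypothetical combination rows.
rows = [
    {"headlines": ["Fast Setup", "Free Trial"], "descriptions": ["Start in minutes."], "impressions": 1200},
    {"headlines": ["Free Trial", "Trusted by Teams"], "descriptions": ["Start in minutes."], "impressions": 800},
    {"headlines": ["Fast Setup", "Trusted by Teams"], "descriptions": ["No credit card needed."], "impressions": 150},
]

totals = impressions_by_asset(rows)
print("most served:", totals.most_common(2))
print("least served:", totals.most_common()[:-3:-1])
```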

4. Follow general guidelines for AI in advertising.

Whatever the specifics of your campaigns, goals, and tools, some rules are broadly true. That’s because they’re dictated by the nature of the available technology and the wider landscape—we don’t have a lot of choice about them.

Structure

Keep all match types together, except in extreme cases where volume permits separation. Structure campaigns in a machine learning–friendly way, with enough conversions per ad group or ad set for the algorithm to learn quickly: ideally 50 per ad group per week on Google and 10 per ad set per week on Facebook.
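These thresholds translate directly into a ceiling on how many ad groups an account can support. This minimal sketch uses the weekly targets stated above and assumes conversions split evenly across ad groups, which real accounts rarely do; the 400 weekly conversions and the 300-group split are illustrative numbers.

```python
# Weekly conversions per ad group (Google) or ad set (Facebook), per the
# rules of thumb above. Treat them as starting points, not platform guarantees.
WEEKLY_CONVERSION_TARGET = {"google": 50, "facebook": 10}


def max_ad_groups(weekly_conversions, platform):
    """How many ad groups could each reach the weekly target, assuming an even split."""
    return weekly_conversions // WEEKLY_CONVERSION_TARGET[platform]


def conversions_per_group(weekly_conversions, ad_groups):
    """Average weekly conversions per ad group under an even split."""
    return weekly_conversions / ad_groups


weekly = 400  # HYPOTHETICAL account-level weekly conversions
for platform in WEEKLY_CONVERSION_TARGET:
    print(platform, "supports up to", max_ad_groups(weekly, platform), "ad groups")
print("spread over 300 ad groups:", round(conversions_per_group(weekly, 300), 2), "per group per week")
```

Splitting the same volume across hundreds of ad groups leaves each one far below either target, which is the learning-phase trap described in section 1.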

Measurement

Optimize for the right metric, one lower in the funnel but with enough volume.

Creative

Use machine learning to reach the right customer with the right ad at the right time. Write different messages to address different phases of the buyer journey.

Audiences

Increase audience size. Increase the lookalike audience percentage from 1–5% to 5–15%. Where interest and behavior targets significantly overlap, combine them. Use exclusions to minimize audience overlap.
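The combine-or-exclude decision can be sketched with set arithmetic. Platforms typically report overlap as an estimate rather than exposing member lists, so the user-ID sets here are purely illustrative, and the 50% combine threshold is a hypothetical cutoff, not a platform recommendation.

```python
def overlap_share(a, b):
    """Fraction of the smaller audience that also belongs to the other one."""
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))


def plan_audiences(a, b, combine_threshold=0.5):
    """Combine heavily overlapping audiences; otherwise keep both and exclude the overlap from `b`."""
    if overlap_share(a, b) >= combine_threshold:
        return "combine", [a | b]
    return "exclude_overlap", [a, b - a]


# Hypothetical audiences as sets of user IDs.
interests = {"u1", "u2", "u3", "u4"}
behaviors = {"u3", "u4", "u5", "u6"}
action, audiences = plan_audiences(interests, behaviors)
print(action, [sorted(aud) for aud in audiences])
```

Measuring overlap against the smaller audience flags the case where one target is mostly contained in another, even when the two differ a lot in size.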

Conclusion

Putting AI to work in advertising means handing off to machines the tasks that they can do better than we ever could. When it comes to multivariate pattern recognition, machines are already significantly superior to humans.

If you want to match ad elements to the customer journey—or you want to drive radical improvements in CTR and lead price by optimizing for lower-funnel metrics—machines should absolutely be your first choice.

But when we do this, we can’t just give machines part of the job we’re already doing and then carry on as normal. A car is a lot faster than walking, but you have to build a road for it to drive on; machines do this stuff faster and better than we do, but we have to facilitate that work.

There are tasks that machines are either absolutely terrible at or can’t do at all. A machine can mix pre-existing content into novel combinations based on set criteria; it can’t come up with something new. We’re a long way from push-button marketing.

This is the start—of using machine learning for digital advertising, of exploring a wide-open field, and of establishing best practices to guide our industry’s future.
