If you’ve ever tried to build an attribution model that wasn’t position-based (e.g. last click, first click, or linear), you might have felt overwhelmed. Customer paths are non-linear and complicated, and many things influence conversions.

Most businesses have a lot of data about customer behavior: the devices they use to purchase, ads, competitors’ pricing, keywords, etc. But they can’t make sense of it all.

When you’re working in a multichannel business and a big chunk of conversions happen offline, it gets even worse. You need to prove that your online marketing campaigns drive offline revenue.

Modern purchasing behavior may include:

Pure online. Finding and buying products online.

Pure offline. Examining and buying products in brick-and-mortar stores.

ROPO. Researching products online and buying them offline (a.k.a. webrooming).

Showrooming. Examining products in physical stores and purchasing them online.

If you measure the efficiency of online marketing by looking only at orders in Google Analytics, you’ll miss the offline orders that your campaigns drive.
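To see how much this can distort the picture, here is a small worked example. All figures are hypothetical and chosen only to illustrate the arithmetic; `offline_revenue_matched` assumes you have already linked in-store orders to campaign visitors.

```python
# Hypothetical numbers for one campaign; none of these come from real data.
ad_spend = 10_000.0
online_revenue = 18_000.0           # orders visible in web analytics
offline_revenue_matched = 14_000.0  # in-store orders linked to campaign visitors


def roas(revenue: float, spend: float) -> float:
    """Return on ad spend: revenue divided by ad cost."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    return revenue / spend


online_only = roas(online_revenue, ad_spend)
with_offline = roas(online_revenue + offline_revenue_matched, ad_spend)

print(f"ROAS, online orders only: {online_only:.2f}")
print(f"ROAS, online + matched offline: {with_offline:.2f}")
```

With these made-up inputs, the same campaign looks far less profitable when only online orders are counted.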

Here’s how to take everything into account and build a valid, valuable multichannel attribution model.

How influential is ROPO?

The ROPO effect varies from country to country and category to category. According to DigitasLBi, 88% of consumers worldwide research products online before buying.

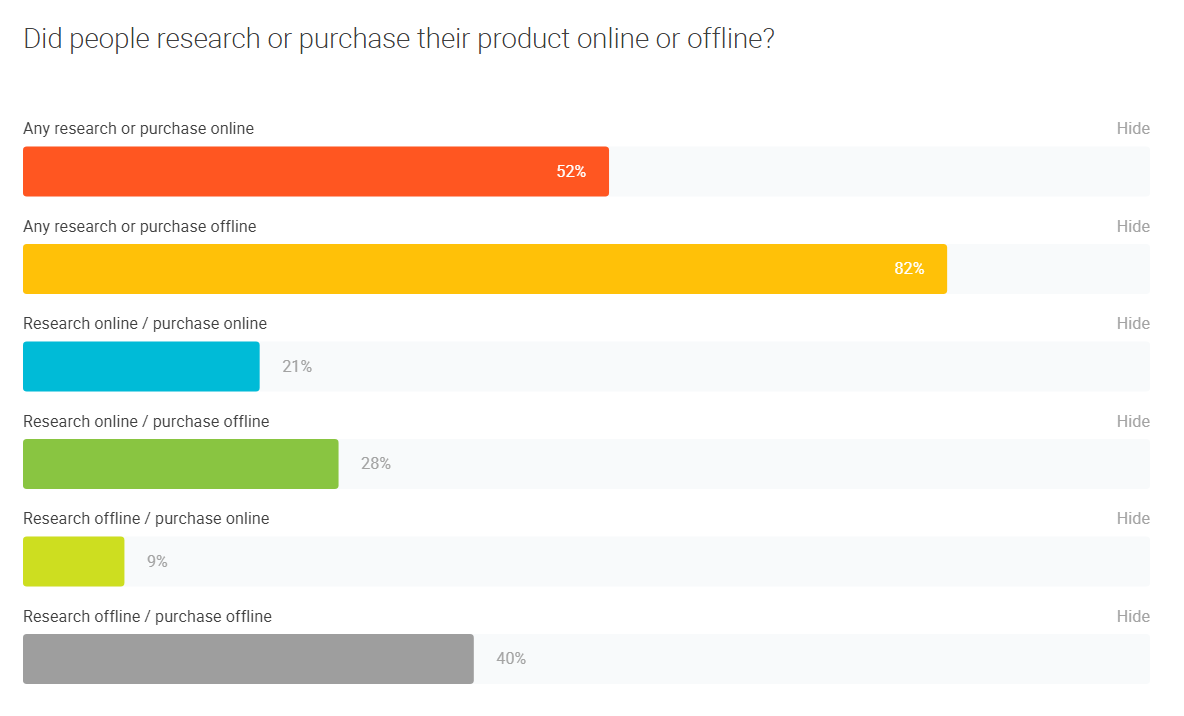

You can find out the percentage of ROPO purchases in your country with Google’s Consumer Barometer:

Google’s Consumer Barometer for the United States shows that 28% of consumers engage in ROPO behavior. (Image source)

When examining the ROPO effect, traditional brick-and-mortar sales virtually vanish for certain categories. There are also categories in which they’re essential. In our experience, product categories where the ROPO effect is significant include:

TVs/monitors;

Mobile phones;

Audio equipment;

Digital cameras;

Hardware.

What drives ROPO behavior?

Shoppers want to view the item up close.

They need the item sooner than it can ship.

They want to avoid shipping fees.

They need in-person advice.

How ROPO affects attribution

Marketers struggle to consider all the touchpoints and include all informational sources, especially as data is stored in different systems and processed and updated at different speeds. Trying to bring all this data together, clean it, and merge it can feel like playing the contraption below.

You’re not alone here, at least according to Strala. Their research on the state of marketing measurement found that only 11% of marketers feel “very confident” in the accuracy of their attribution models.

And, according to KPMG, only 35% of surveyed organizations have a high level of trust in their organization’s use of data analytics.

Nearly a quarter of KPMG respondents had “limited trust or active distrust” in analytics data. (Image source)

To bridge the gap between scattered data and actionable analytics, follow these steps.

8 steps to create a multichannel attribution model

Step 1: Define your questions

Start with hypotheses, and formulate questions you want to answer. For instance, say you want to understand what share of your offline sales were influenced by online ad campaigns. Or, you may want to know at which stage in the funnel it’s best to encourage customers to make a purchase (and the best channel to do so).

Once you have those questions written down, prioritize them. Prioritization decreases the number of subsequent tasks and helps you start building an MVP version of your attribution dashboard.

Step 2: Find the data

Identify the most critical data sets in your company. These can be ad platforms (Google Ads, Facebook, Twitter, etc.), email marketing platforms, call tracking systems, mobile analytics systems, CRM and ERP systems, etc.

Then, define the fields that will act as keys to link data from different sources in a single view.

The biggest problem companies usually run into is not being able to identify the same users across online and offline channels. Here are six ways to connect the dots between online and offline:

1. Use online apps to lead customers offline. VOX, a Polish furniture and decorations producer, encouraged online customers to schedule face-to-face consultations in the company’s brick-and-mortar stores.

To do that, they built an app called VOXBOX in which customers could design their own furniture. After users design furniture, the app invites them to a physical store for a consultation. Projects are automatically sent to store assistants, which makes consultations easier for customers and staff.

The desktop interface for the VOXBOX app.

VOX customers can design a home interior at their own pace in the app, take time to choose the best solutions, then schedule an appointment with a consultant who can implement their ideas.

2. Use beacons—tiny devices that run on BLE (Bluetooth Low Energy). You can place beacons in your brick-and-mortar store, office, or salon.

Then, wait for customers who have your app installed on their mobile devices. Beacons communicate with the app, sending their ID, signal strength, and additional data.

Using this technology, you can see areas of a store that users visit and even the products they pick up. Macy’s is experimenting with a mobile app that automatically checks in customers when they enter a Macy’s store and then displays information, via beacons, based on their online and offline visits and buying behavior.

3. Offer loyalty cards that must be activated online with an email address and are connected to a user ID, phone number, etc. These can be a great help in tracking the customer journey.

4. Provide lead magnets in exchange for data. This is a common strategy to acquire and nurture leads, using things like:

“Special offer” emails that customers can subscribe to;

Promotions and contests that can be entered by signing up and providing contact information.

They can also engage past purchasers to learn more about repeat buyers:

An extra month of warranty for registering the receipt online;

Video manuals and advice on how to use a product in exchange for an email address.

5. Use soft conversions to measure intent with event tracking. You can use soft ROPO conversions to get an idea of the ROPO effect. Soft ROPO conversions are interactions an online visitor has with a website and/or ad that indicate the likelihood that they’ll actually visit a store.

For example, a visitor who enters their postcode in the store locator and views the opening hours is likely to visit the store.

6. Connect activity on the website, applications, CRM, and POS with a unique user ID.
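To make the soft conversions from point 5 more concrete, here is a minimal sketch that flags sessions in which a visitor both searched the store locator and viewed opening hours. The event names and sample rows are hypothetical; substitute whatever your own event tracking sends.

```python
from collections import defaultdict

# Hypothetical event names; use whatever your own event tracking sends.
SOFT_ROPO_EVENTS = {"store_locator_search", "opening_hours_view"}

events = [
    {"session_id": "s1", "event": "product_view"},
    {"session_id": "s1", "event": "store_locator_search"},
    {"session_id": "s1", "event": "opening_hours_view"},
    {"session_id": "s2", "event": "product_view"},
    {"session_id": "s3", "event": "store_locator_search"},
]


def soft_ropo_sessions(events, required=SOFT_ROPO_EVENTS):
    """Return the IDs of sessions that fired every required event."""
    seen = defaultdict(set)
    for e in events:
        seen[e["session_id"]].add(e["event"])
    return sorted(sid for sid, fired in seen.items() if required <= fired)


flagged = soft_ropo_sessions(events)
total_sessions = len({e["session_id"] for e in events})
print(flagged, f"{len(flagged) / total_sessions:.0%} of sessions")
```

Requiring every event is a deliberately strict rule. You could just as well score each signal separately and treat the total as a rough indicator of store-visit intent.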

Step 3: Decide on your infrastructure and approach

When it comes to infrastructure, you can choose from an on-premise solution, a cloud-based solution (such as Amazon Redshift or Google BigQuery), or a hybrid solution.

My personal choice is Google BigQuery:

Easy to start using;

Ready-to-use integrations;

Super powerful (processes terabytes of data in seconds);

Guarantees 99.9% uptime;

Cost-effective;

Secure;

Strong supporting infrastructure (Compute Engine, Data Prep, etc.).

Next, decide on your approach for architecting your data:

Data lake. A repository of data stored in its raw format.

Data fabric. A system that provides seamless, real-time integration and access across the multiple data silos of a big data system.

Data hub. A collection of data from multiple sources, homogenized and organized for distribution, sharing, and often subsetting.

According to recent research by McKinsey, only 8% of data lakes have ever moved from “proof of concept” to production. But don’t let this demotivate you—if you follow the steps below, your chances of success are pretty high.

Do you want to build or buy a service to connect all your systems? If you build your own solution, it will correspond 100% to your needs, but it will also cannibalize your resources. Implementing it can take longer than expected, especially if you don’t have a dedicated project manager and experience developing internal tools. You’ll also have to support the product yourself.

Luckily, the market is flooded with decent connectors, such as Stitch or Funnel.io, that can help you get up and running and decrease time-to-value. My recommendation is to buy when possible and save your internal resources for something more valuable.

Here are a couple of examples of how to build your analytics infrastructure and connect the dots.

Darjeeling case study

Let’s take a look at Darjeeling, a top retailer in the women’s lingerie market. There are about 155 Darjeeling stores all over France, and more than 8.7 million visitors come to those stores every year. The company sells over 5 million items a year, with an annual turnover of €100 million.

Darjeeling uses different systems to collect, store, and process data. User behavior data is sent to Google Analytics, while data about costs and order completion is collected in the company’s CRM.

The data in the CRM is in French, while the data collected in Google Analytics is in English. The data structure is also different between the two systems. To evaluate the overall effectiveness of online advertising on offline sales, Darjeeling needed to combine all this data into a single system.

To achieve their goals, Darjeeling’s marketers decided to:

Collect data about online sessions, offline sales, and order completion rates.

Combine data about offline sales and user behavior on the website, taking into account order completion rates.

Create reports and dashboards based on the collected data to evaluate the impact of online advertising on offline sales.

1. Collect data

Darjeeling imported data about user behavior on the website to Google BigQuery using OWOX BI Pipeline (our proprietary connector). They also sent transaction data from their CRM to Google BigQuery. This helped Darjeeling marketers calculate the order completion rate and avoid possible data losses (such as those that can happen if JavaScript isn’t loaded in the browser).
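As a rough illustration of what comparing the two sources gives you, here is a minimal sketch with hypothetical transaction IDs. It assumes "order completion rate" means the share of web-tracked orders that the CRM marks as completed; the status values are invented for the example.

```python
# Hypothetical transaction IDs; in practice these would come from two tables.
web_orders = {"T1", "T2", "T3", "T4", "T5"}  # tracked by web analytics
crm_orders = {                               # every order known to the CRM
    "T1": "completed",
    "T2": "completed",
    "T3": "cancelled",
    "T4": "completed",
    "T6": "completed",                       # never tracked online
}

crm_ids = set(crm_orders)
completed = {tid for tid, status in crm_orders.items() if status == "completed"}

# Share of web-tracked orders that the CRM marks as completed.
matched = web_orders & crm_ids
completion_rate = len(matched & completed) / len(matched)

# Orders the CRM knows about but web tracking missed (e.g. blocked JavaScript).
missed_by_tracking = sorted(crm_ids - web_orders)
# Orders tracked online with no CRM record yet.
missing_in_crm = sorted(web_orders - crm_ids)

print(f"completion rate: {completion_rate:.0%}")
print("missed by tracking:", missed_by_tracking)
print("missing in CRM:", missing_in_crm)
```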

Darjeeling imported data about completed orders to Google Cloud Storage on a daily basis, and our analysts created a script to help them collect this data from Google Cloud Platform and import it into Google BigQuery.

Now, this data is automatically imported with the Cloud Dataprep service by Trifacta. Here’s the data flow:

2. Combine data

Darjeeling marketers combined data about offline sales and user behavior on the website while considering order completion rates.

To merge data about online sessions and order completion rates into a single view, Darjeeling used the user_id assigned to each user who signed in to the company’s website. The user_id is linked to the number of the customer’s loyalty card and stored in the CRM.

When a user visits the website, their user_id is sent to Google Analytics and Google BigQuery as a custom dimension. In BigQuery, it’s combined with two other keys: transaction_id and time.

Data about all completed orders is stored in Google BigQuery in the following structure:

This data was combined by Darjeeling analysts as follows:

Analysts looked at the transaction_id, user_id, and time keys from the table.

They selected data from online interactions in which orders were completed before the selected date.

They identified the channel groupings for the sessions that were closest in time to the transaction date.
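The three steps above can be sketched roughly as follows. This is a minimal illustration in plain Python with made-up rows, not Darjeeling's actual BigQuery logic. The field names follow the keys named above; the `attribute` helper and the 90-day lookback (borrowed from the decision window of up to 90 days that the article cites) are assumptions.

```python
from datetime import datetime, timedelta

sessions = [  # hypothetical web sessions carrying the signed-in user_id
    {"user_id": "u1", "time": datetime(2019, 3, 1, 10), "channel": "Paid Search"},
    {"user_id": "u1", "time": datetime(2019, 3, 9, 18), "channel": "Email"},
    {"user_id": "u2", "time": datetime(2019, 3, 20, 9), "channel": "Display"},
]
offline_orders = [  # hypothetical CRM rows keyed by the same user_id
    {"transaction_id": "A100", "user_id": "u1", "time": datetime(2019, 3, 10, 12)},
    {"transaction_id": "A101", "user_id": "u2", "time": datetime(2019, 3, 15, 12)},
    {"transaction_id": "A102", "user_id": "u3", "time": datetime(2019, 3, 16, 12)},
]

LOOKBACK = timedelta(days=90)  # assumed window, not a confirmed setting


def attribute(order, sessions, lookback=LOOKBACK):
    """Return the channel of the user's latest session before the order, or None."""
    candidates = [
        s for s in sessions
        if s["user_id"] == order["user_id"]
        and order["time"] - lookback <= s["time"] <= order["time"]
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s["time"])["channel"]


attributed = {o["transaction_id"]: attribute(o, sessions) for o in offline_orders}
print(attributed)
```

Orders with no earlier session for the same user stay unattributed (`None`) rather than being forced onto a channel, which keeps the ROPO share conservative.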

The result was this table:

According to Darjeeling’s research of the customer journey, it takes up to 90 days for visitors to make a purchase decision after visiting the website.

Using this data, we calculated the number of days between the initial website visit and the purchase for each order. The results were grouped into segments of 7, 10, 14, 30, and 60+ days. This analysis revealed that 85% of all ROPO purchases of Darjeeling products were made within 14 days.
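A calculation like this can be sketched in a few lines. The date pairs below are hypothetical and do not reproduce Darjeeling's figures. The thresholds reuse the segment sizes named above, with exact boundaries assumed, since the article doesn't spell them out.

```python
from datetime import date

# Hypothetical (first_visit, purchase) date pairs, one per offline order.
orders = [
    (date(2019, 3, 1), date(2019, 3, 3)),
    (date(2019, 3, 1), date(2019, 3, 8)),
    (date(2019, 3, 2), date(2019, 3, 14)),
    (date(2019, 3, 5), date(2019, 3, 30)),
    (date(2019, 1, 10), date(2019, 3, 20)),
]
THRESHOLDS = (7, 10, 14, 30, 60)  # days; boundaries are an assumption

lags = [(purchase - first_visit).days for first_visit, purchase in orders]


def share_within(lags, days):
    """Fraction of orders placed at most `days` days after the first visit."""
    return sum(lag <= days for lag in lags) / len(lags)


cumulative = {d: share_within(lags, d) for d in THRESHOLDS}
for d, share in cumulative.items():
    print(f"within {d:>2} days: {share:.0%}")
```

Reading the cumulative shares top to bottom shows where the curve flattens, which is one way to pick a sensible attribution window.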

3. Create reports and dashboards

Finally, Darjeeling marketers created reports and dashboards based on the collected data to evaluate the impact of online advertising on offline sales.

Overall, the project helped Darjeeling’s marketers identify more users and establish that 30 to 40% of customers visit the company’s website before buying offline. That data, in turn, helped them optimize their ad budget and justify greater investment in online advertising.

Ile de Beaute case study

The Ile de Beaute chain of stores is part of Sephora and occupies a leading position in the global market for perfumes and cosmetics. The Ile de Beaute marketing team wanted to understand the interactions between online and offline behavior of their users.

To accomplish this, they did the following:

Chose a single repository for merging data. Ile de Beaute chose Google Cloud Storage for its connection to Google BigQuery.

Automated data flows. Ile de Beaute used OWOX BI Pipeline to send customer behavior data from Google Analytics to Google BigQuery. They also set up automatic data uploads from Google Ads to Google Analytics as well as expense data to Google Analytics from Yandex.Direct, Yandex.Market, VKontakte, Criteo, Facebook, and other advertising sources. Finally, they set up a connector to send data from their CRM to Google BigQuery.

Built reports for company management. Using SQL queries, the Ile de Beaute team combined all data collected in BigQuery into a single table. They use this data to build reports in a company-friendly format with Google Data Studio.

This is what their infrastructure looks like:

After analyzing the data, the team at Ile de Beaute was able to show hard data about the influence of digital media advertising on offline sales.

Step 4: Verify data quality

You can start with a simple Google Analytics setup audit and take it from there. Make sure your UTM tagging and other taxonomies are done correctly and that you have a userID field to track customers across devices.
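Part of such an audit can be automated. Below is a minimal sketch of a UTM check using only Python's standard library. The list of allowed `utm_medium` values and the lowercase rule are a hypothetical house taxonomy, so adjust both to your own conventions.

```python
from urllib.parse import urlparse, parse_qs

REQUIRED_UTM = ("utm_source", "utm_medium", "utm_campaign")
# Hypothetical house taxonomy: the utm_medium values your team has agreed on.
ALLOWED_MEDIUMS = {"cpc", "email", "social", "display", "referral"}


def utm_problems(url: str) -> list[str]:
    """Return human-readable problems found in a landing-page URL's UTM tags."""
    params = parse_qs(urlparse(url).query)
    problems = [f"missing {key}" for key in REQUIRED_UTM if key not in params]
    for key in REQUIRED_UTM:
        for value in params.get(key, []):
            if value != value.lower():
                problems.append(f"{key} is not lowercase: {value}")
    medium = params.get("utm_medium", [""])[0].lower()
    if medium and medium not in ALLOWED_MEDIUMS:
        problems.append(f"unknown utm_medium: {medium}")
    return problems


good = "https://example.com/?utm_source=google&utm_medium=cpc&utm_campaign=spring"
bad = "https://example.com/?utm_source=Facebook&utm_medium=paid"
print(utm_problems(good))
print(utm_problems(bad))
```

Running a check like this over every campaign URL before launch is much cheaper than cleaning up inconsistent source and medium values in reports afterwards.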

Also, take time to implement a proper measurement plan. This point is usually underestimated. But if you have sh** going in, you won’t have gold coming out.

Reliable data requires the right people with the right knowledge—plus sufficient attention, time, and perseverance. But if you succeed, the payback is massive.

Step 5: Ensure data literacy

Does your team have the necessary level of data literacy? Does everyone understand the metrics in the same way? Do you know how to use the tools and understand the statistics that answer business questions?

If not, plan activities (trainings, workshops, etc.) and create documentation to increase your team’s data literacy. Your data culture directly reflects your company’s values, and you can always improve it. But to do so, you need people devoted to advocacy and tools that make self-service easy.

Ask yourself the following questions:

Are employees as curious and motivated as they should be when it comes to using data? Why is that?

What can I do to correct the negative and amplify the positive?

Does the percentage of employees who use our self-service analytics tools match my expectations?

Step 6: Build dashboards

If everything else is in place, you can move to the reporting stage. Remember that there should be no calculated metrics on dashboards—you shouldn’t be blending or manipulating data. Don’t try to make your visualization tool a computing one.

All calculations have to be done beforehand and stored in a data set that your dashboard refers to. Otherwise, you’ll run into a massive governance problem.

Separating computing and visualization makes it much easier to track versions of your calculation logic and to manage dashboards at scale. Trust me: it will save you hours of debugging when the numbers go wrong.
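As a sketch of what "calculate first, visualize second" can look like, the snippet below aggregates hypothetical order rows into a finished KPI table that a dashboard would only read. The column names and the `build_kpi_table` helper are illustrative, and the in-memory CSV stands in for whatever table or file your dashboard actually points at.

```python
import csv
import io
from collections import defaultdict

# Hypothetical order-level rows, as they might come out of the merged data set.
rows = [
    {"channel": "Paid Search", "revenue": 120.0, "cost": 40.0},
    {"channel": "Paid Search", "revenue": 80.0, "cost": 60.0},
    {"channel": "Email", "revenue": 50.0, "cost": 5.0},
]


def build_kpi_table(rows):
    """Aggregate revenue and cost per channel and derive ROAS once, upstream."""
    totals = defaultdict(lambda: {"revenue": 0.0, "cost": 0.0})
    for r in rows:
        totals[r["channel"]]["revenue"] += r["revenue"]
        totals[r["channel"]]["cost"] += r["cost"]
    table = []
    for channel, t in sorted(totals.items()):
        roas = round(t["revenue"] / t["cost"], 2) if t["cost"] else None
        table.append({"channel": channel, **t, "roas": roas})
    return table


kpi_table = build_kpi_table(rows)

# Write the finished table; the dashboard only reads these columns.
buffer = io.StringIO()  # stands in for a file or warehouse table
writer = csv.DictWriter(buffer, fieldnames=["channel", "revenue", "cost", "roas"])
writer.writeheader()
writer.writerows(kpi_table)
print(buffer.getvalue())
```

Because the ROAS formula lives in one place, a change to the calculation is a single reviewed code change rather than an edit repeated across dashboards.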

It’s good practice to do a little homework before building your dashboard:

Define the essential metrics that will answer your questions.

Ensure that KPI calculation logic is extremely transparent and approved by the team.

Create a prototype on paper (our preference) or with the help of prototyping tools to check the logic.

Step 7: Act on the results

Remember the hypotheses you based your questions on at the very beginning? Now you have the answers—act accordingly. The most beautiful dashboard won’t change your business unless you act on the numbers it shows you.

Step 8: Support the system

To make sure people actually use your system, make sure it’s reliable, allows for collaboration, and properly integrates new data flows.

June Dershewitz, Head of Data Strategy at Amazon Music and former Director of Analytics at Twitch, shared the taxonomies that Twitch uses to make self-service analytics happen:

Interfaces. Data should be easy for everyone to work with.

Aggregates. Data should be clean, efficient, and organized.

Data catalog. Data and metadata should be discoverable.

To check if self-service analytics are working, Twitch runs quarterly data satisfaction surveys and asks their employees:

Do you have the data you need to do your job effectively?

Are you able to get data in a timeframe that meets your business needs?

If most of your team answers “No” to one of these questions, it means your self-service analytics aren’t working.
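If you run a similar survey, the check itself is simple arithmetic. Here is a minimal sketch with hypothetical responses; the question keys are made up, and "most" is read as more than half of respondents.

```python
# Hypothetical survey responses: one dict per employee, True means "Yes".
responses = [
    {"has_needed_data": True, "timely_access": True},
    {"has_needed_data": True, "timely_access": False},
    {"has_needed_data": False, "timely_access": False},
    {"has_needed_data": True, "timely_access": False},
]


def no_share(responses, question):
    """Fraction of respondents who answered "No" to the given question."""
    return sum(not r[question] for r in responses) / len(responses)


shares = {q: no_share(responses, q) for q in responses[0]}
# "Most" is interpreted here as more than half; adjust if you prefer another bar.
failing = [q for q, share in shares.items() if share > 0.5]
print(shares, failing)
```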

Conclusion

Multichannel attribution that includes online and offline purchases is complex—it is the “unmeasurable.” Or so it would seem. No attribution model will provide perfect data, but a decent one can absolutely offer directional data.

Here are some keys to keep in mind:

Don’t try to measure everything.

Ask questions you’re ready to act on.

Start small and move iteratively.

If you work for a multichannel business, rethink your attribution strategy and consider ROPO while evaluating ROAS.

Build your own attribution approach considering the order completion rate and offline sales.

Create lead magnets to identify users and increase the authorization rate.

Take time to list data sources and lay out the data flow.

Invest in developing your data culture and data literacy to make sure the team is data-driven.
